What to Know About Transcription Accuracy

Updated: June 13, 2019

If you want to convey the correct meaning of your video content in text form,

Defining Transcription Accuracy

Though the definition of transcription accuracy may seem obvious, it’s often difficult to tell whether a transcript is accurate, especially at first glance. We’re here to explain just what it means for video and audio transcripts to be accurate.

3Play Media guarantees a 99% transcription accuracy rate, but what does that mean? A 99% accuracy rate allows for a 1% error rate, or a leniency of 15 errors per 1,500 words. What factors go into measuring transcription accuracy? At 3Play Media, we look for proper grammar, punctuation, and spelling, since all three are essential in conveying the original message successfully.
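To make the arithmetic concrete, here is a minimal Python sketch of the error allowance at a given accuracy rate. The function name `allowed_errors` is hypothetical and chosen only for illustration; it is not part of any 3Play Media tool.

```python
def allowed_errors(word_count: int, accuracy: float) -> int:
    """Number of word errors permitted at a given accuracy rate.

    Hypothetical helper for illustration only.
    """
    # round() absorbs floating-point noise in (1 - accuracy)
    return round(word_count * (1 - accuracy))


print(allowed_errors(1500, 0.99))  # 15
```

The same function shows how quickly the allowance grows as accuracy falls: at 90% accuracy, the same 1,500-word transcript could contain 150 errors.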

ASR vs. Human Intervention

Automatic Speech Recognition (ASR) is software that converts an audio file into text; it’s often cheap and fast. However, ASR-only transcripts are highly inaccurate, with incorrect spelling and grammar. ASR also doesn’t include speaker identifications or critical sound effects. Here’s the scoop on ASR-only transcription accuracy:

ASR produces accuracy rates of about 60-80%; that means that between 1 in 5 and 2 in 5 words in an ASR transcript are incorrect.

The chart below outlines how word-to-word accuracy rates from speech recognition compound across a sentence, assuming a range of accuracies and 8- and 10-word sentences. If each word within a transcript has a 1 in 10 chance of being incorrect (a 90% accuracy rate), then the chance that an entire sentence is correct is much lower. As you can see, true accuracy rates quickly drop as more words are introduced into a sentence. With ASR only, transcripts are simply not accurate. That’s where humans come in. Using speech recognition technology as a first-draft tool is useful and helps to make the transcription process efficient, but a person is still needed to edit the text and check for quality.

Video Transcription Accuracy Rates

| Word-to-Word Accuracy | 1 of x Words Incorrect | 8-Word Sentence Accuracy | 10-Word Sentence Accuracy |
|---|---|---|---|
| 50% | 1 of 2 | 0% | 0% |
| 67% | 1 of 3 | 4% | 2% |
| 75% | 1 of 4 | 10% | 6% |
| 85% | 1 of 7 | 27% | 20% |
| 90% | 1 of 10 | 43% | 35% |
| 95% | 1 of 20 | 66% | 60% |
| 98% | 1 of 50 | 85% | 82% |
| 99% | 1 of 100 | 92% | 90% |
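The sentence-level figures in the chart are consistent with a simple model: if each word is correct independently with the same probability, the chance that every word in a sentence is correct is that probability raised to the sentence length. The sketch below works under that independence assumption, which is ours and not something the chart states; the function name `sentence_accuracy` is hypothetical.

```python
def sentence_accuracy(word_accuracy: float, sentence_length: int) -> float:
    """Chance that every word in a sentence is correct.

    Assumes each word is right or wrong independently of the others,
    which is a simplification made for this illustration.
    """
    return word_accuracy ** sentence_length


# Word-to-word accuracy rates taken from the chart above
for word_accuracy in (0.50, 0.67, 0.75, 0.85, 0.90, 0.95, 0.98, 0.99):
    eight = sentence_accuracy(word_accuracy, 8)
    ten = sentence_accuracy(word_accuracy, 10)
    print(f"{word_accuracy:.0%} per word: {eight:.0%} (8 words), {ten:.0%} (10 words)")
```

Under this model, even a 95% word-to-word accuracy rate leaves roughly one in three 8-word sentences with at least one error.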

When Speech Turns to Text

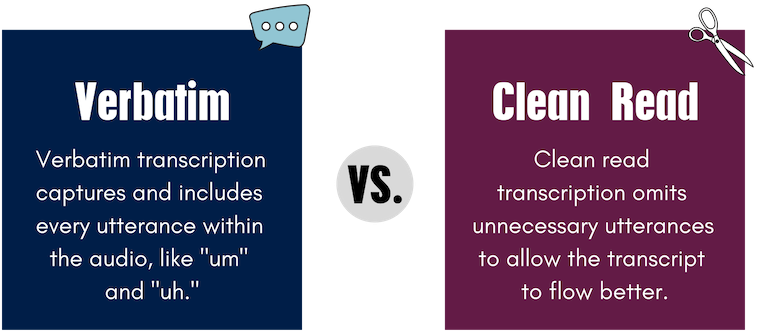

Now that we’ve defined transcription accuracy, another question surfaces: should a written transcript capture every single utterance or should it be edited for a reading audience? To address this question, let’s look at the two types of transcription methods, verbatim and clean read.

Verbatim vs. Clean Read Transcription

Verbatim transcription captures every utterance within the audio, including “like,” “um,” and stutters. Though this type of speech is natural when spoken, reading it can be frustrating because it doesn’t flow well. Verbatim transcription is good for scripted media, such as a television show, but most of the time clean read is the preferred option. Clean read transcription omits unnecessary utterances so that the text version of the speech flows more easily for the reader. How does that affect accuracy, though?

3Play Media delivers clean read transcripts that preserve the meaning of every single sentence. Although omitting non-critical utterances means the transcript isn’t a direct lift of the audio, the cleaned-up transcript still captures all of the intended meaning of the content and ends up conveying the message more clearly to the reader.

Why Transcription Accuracy Matters

Transcription accuracy matters because one error within a sentence can completely change its meaning. It’s easy for speech recognition software to mistake one word for another similar-sounding word, like “can” for “can’t” or “won’t” for “want.” Imagine a transcript that relays an instructor’s message as saying that students can do assignments for extra credit when the accurate transcription should have read that students can’t. For a deaf viewer reading this transcript or watching these captions, the real meaning of the message is lost, and they receive a false statement. Transcription inaccuracy is particularly detrimental to instructional content like training videos or online education lectures, since it defeats the intended meaning of the sentence. Accuracy is even more crucial for those who are d/Deaf or hard of hearing, since they rely on transcripts and captions to watch videos.

Just a few percentage points of inaccuracy can be detrimental to the overall integrity of a transcript. At 3Play Media, we firmly believe that it is our responsibility to provide an accurate output. Our three-step process uses ASR, human editing, and human quality review to deliver a guaranteed 99% accuracy rate so that you never have to wonder if your message is being misdelivered.

This blog was originally published on April 23, 2009, by CJ Johnson and has since been updated.
