Artificial Intelligence Is Good, but Is It Good Enough for Captions?

Updated: August 28, 2019

“Artificial intelligence (AI) makes it possible for machines to learn from experience, adjust to new inputs, and perform human-like tasks.” In other words, rather than programming machines with a single rule for output, they are taught to recognize patterns and then utilize those patterns to make decisions somewhat like a human might.

In this post, we will take a look at the implications of artificial intelligence and machine learning for automatic speech recognition, and how this may be used for automatic captioning.

The Current State of AI

In recent years we’ve seen the rise of digital assistants with Siri and Alexa, chatbots, personalized search results (that are sometimes so good it’s creepy!) and increased reliance on speech recognition to dictate text messages, emails, and so on. But what does the current state of AI mean for automatic speech recognition (ASR?)

Understanding AI, ML, and ASR

Before we discuss the relationship between artificial intelligence, machine learning, and automatic speech recognition, let’s take a look at what each of these terms means:

- Artificial Intelligence (AI): refers to intelligence demonstrated by machines

- Machine Learning (ML): allows machines to learn outputs from previous experience

- Automated Speech Recognition (ASR): converts spoken words into computerized text

If we put these all together, it’s easier to understand that AI is the overarching discipline that refers to making machines “smart.” Machine Learning refers to systems that can learn by themselves from experience. ML is not the same as AI, but rather is a subset of AI. Most AI work now involves ML because intelligent behavior requires considerable knowledge, and learning is the easiest way to get that knowledge.

One area of high achievement is ASR – automatically transcribing voice recordings into the written word. In the rest of this post, we will take a look at the applications in which ASR has been used (and how well) including – of course – for captioning.

Applications of ASR

For ASR to work, the machine must be programmed to predict and deliver all possible outcomes. We have seen successes in specific applications where the input space is very narrowly constrained. For example, digital assistants like Siri and Alexa work sufficiently well, as the vocab size is task and command. In other applications, larger vocabulary sizes have posed a challenge.

ASR for Captioning

Before we talk about captioning, think for a moment about self-driving vehicles. The notable failures in automated driving have been due precisely to unexpected visual input, which is also what happened with Google’s infamous image tagging error. If you think about programming a car to predict all of the possible situations it might encounter on the road and to adjust to those situations, it feels nearly impossible. The same is true for ASR. As the vocabulary size grows, the task gets more complicated. While there have been many new improvements in ASR, the captioning task is much more complicated than the tasks where the most improvements have been made.

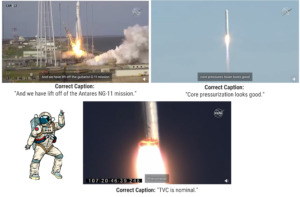

We recently saw an example from the NASA launch of why automatic captions just don’t cut it. The images below show the incorrect automatic captions, along with what was actually said. While we agree the sight is phenomenal, inaccurate captions are certainly not!

Why Are ASR Capabilities so Different for Captioning?

When relying solely on ASR technology, the accuracy rates are pretty abysmal – but why? Captioning is much more complicated than many other applications of ASR. Captioning is primarily characterized by long-form content, often the speaker is unknown, and it’s critical to transcribe every spoken word.

When it comes to captioning, some of the most common causes of ASR errors include:

Speaker labels

- Punctuation, grammar, and numbers

- Non-speech elements

- [INAUDIBLE] tags

- Multiple speakers or overlapping speech

- Background noise or poor audio quality

- False starts

- Acoustic error

ASR technology is also prone to fail on small “function” words which are important in conveying meaning in speech. For example, take a look at the two sentences below:

“I didn’t want to do that exercise.” vs. “I did want to do that exercise.”

This example is a very typical ASR error. However, the meaning is completely reversed. It is sporadic for a human – especially a trained editor – to make such an error, as they will use the context to “fill in” the correct meaning in spite of any noise that may have been responsible for the ASR failure. Many of the above challenges are not being addressed by current technology or by current research.

In cases of perfect audio conditions, we have seen ASR technology produce around 80% accurate captions, at best. Perfect audio conditions, however, are rare.

We Still Need Humans

As the saying goes, “If you want something done right, do it yourself.” (Or at the very least – have a human do it. 🤷♀️) All jokes aside, when it comes to purely automated captioning solutions, there are no solutions on the horizon that will address all of the challenges posed by the captioning task. Technology just doesn’t have the same capability that humans do to understand nuances or discern unclear words from context. Therefore, we should be careful about generalizing from the success of automated approaches to any particular business problem, such as captioning.

ASR can play an important part in captioning when used in conjunction with human editors. At 3Play Media, we use a 3-step process to provide a high-quality, yet cost-effective captioning solution.

- First, your video will go through ASR technology to produce a rough draft.

- Next, a human editor will clean up the rough draft using our proprietary software.

- Finally, a quality assurance manager will conduct a final review to ensure 99% accuracy.

At 3Play, we feel confident that human editors will remain a necessary component of producing high-quality captions for the foreseeable future.

—

Get started with the highest quality captions in the industry or learn more about 3Play Media’s captioning solution today.

Further Reading

Subscribe to the Blog Digest

Sign up to receive our blog digest and other information on this topic. You can unsubscribe anytime.

By subscribing you agree to our privacy policy.

Speaker labels

Speaker labels![3Play Media. ARTIFICIAL INTELLIGENCE IS GOOD...BUT IS IT GOOD ENOUGH FOR CAPTIONS? The Definitions: Artificial intelligence allows machines to recognize patterns and utilize those patterns to make decisions, rather than be programmed with a single rule for output. AI, ML & ASR - Oh My! Artificial Intelligence (AI): refers to intelligence demonstrated by machines. Machine Learning (ML): allows machines to learn outputs from previous experience. Automated Speech Recognition (ASR): converts spoken words into computerized text. Applications: Siri, Alexa. ASR For Captioning: Captioning is much more complicated than many other applications of ASR. Why? Long-form content, Unknown speaker, Critical to transcribe every spoken word. COMMON CAUSES OF ASR ERRORS: Word Error Rate: Speaker labels, Punctuation, grammar, numbers, Relevant non-speech elements, No [INAUDIBLE] tags. Formatting Error Rate: Multiple speakers, Overlapping speech, Background noise, Poor audio quality, False starts, Acoustic errors, “Function" words. We Still Need Humans: Technology doesn’t have the same capability that humans do to understand nuances or context, but it can play a part in the captioning process if humans are involved, too. 3Play Process: Our 3-step process leverages technology and human editors: 1) Your video goes through ASR technology to produce a rough draft. 2) A certified human editor will edit and clean up the rough draft. 3) A quality assurance manager will conduct a final review for 99% accuracy. LEARN MORE AT WWW.3PLAYMEDIA.COM | INFO@3PLAYMEDIA.COM](https://www.3playmedia.com/wp-content/uploads/ai-asr-new-120x300.jpg)